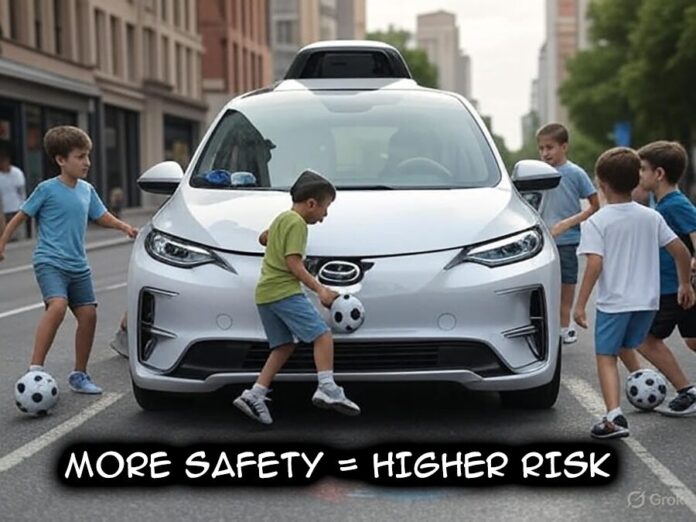

Malcolm Gladwell discovered something weird about self-driving cars. When he ran in front of a Waymo vehicle in Phoenix, it came to a stop and waited. When he circled it like a shark, it just sat there patiently. His conclusion? If every car drove itself, kids could play soccer on Manhattan’s busiest streets because the cars would simply stop and wait forever. The very feature designed to save lives – perfect safety programming – could break city traffic entirely.

This paradox extends far beyond autonomous vehicles. Years of research reveal an uncomfortable truth: when we make things too safe, people often behave in ways that cancel out the safety benefits. Sometimes they make things worse. It’s as if our brains contain a risk thermostat, constantly adjusting to maintain whatever level of danger feels right.

Gerald Wilde spent decades studying this phenomenon, which he called risk homeostasis theory. We all have a preferred level of risk, like a temperature setting on a thermostat. When safety features make us feel overly secure, we unconsciously take greater risks to restore equilibrium. We’re not trying to be dangerous. Our brains simply evolved to balance safety and reward, and too much of either feels wrong.
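The thermostat analogy can be made concrete with a toy feedback loop. This is only an illustrative sketch, not Wilde's own formulation: the function name, the linear link between "boldness" and perceived risk, and the `gain` parameter are all assumptions chosen to keep the arithmetic simple. It models the strong form of the theory, in which behavior adjusts until perceived risk is back at the preferred level.

```python
def simulate_risk_thermostat(target_risk, hazard_per_unit,
                             steps=50, gain=0.5, boldness=1.0):
    """Toy risk-homeostasis loop (hypothetical model, for illustration only).

    Assumes perceived risk = boldness * hazard_per_unit.
    Returns the boldness level recorded after each step.
    """
    history = []
    for _ in range(steps):
        perceived = boldness * hazard_per_unit
        # Feeling "too safe" (perceived < target) pushes boldness up;
        # feeling exposed (perceived > target) pulls it back down.
        boldness += gain * (target_risk - perceived) / hazard_per_unit
        history.append(boldness)
    return history


# Same person, same preferred risk level; the second run halves the hazard,
# standing in for a new safety feature.
before = simulate_risk_thermostat(target_risk=1.0, hazard_per_unit=1.0)[-1]
after = simulate_risk_thermostat(target_risk=1.0, hazard_per_unit=0.5)[-1]
print(f"boldness before: {before:.2f}, after: {after:.2f}")
print(f"perceived risk after: {after * 0.5:.2f}")
```

In this sketch, halving the hazard roughly doubles how boldly the simulated person behaves, so the risk they experience drifts back to where it started. Real behavior is far messier, but the loop shows why a safety gain on paper need not become a safety gain in practice.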

The evidence surrounds us. Munich taxi drivers with anti-lock brakes tailgated more aggressively and crashed just as often as drivers without them. Seatbelt laws produced an even stranger outcome: while driver deaths decreased, **pedestrian fatalities increased**, leaving the total death toll essentially unchanged. Protected drivers felt safer. They drove more carelessly. The people outside their cars paid the price.

Sports offer a vivid demonstration. American football players reportedly suffer **2.8 times as many concussions** as rugby players, even though rugby features twice as many tackles per game. The difference? Helmets. Football’s protective gear creates what one rugby professional called a “feeling of invincibility” that encourages players to “tackle with their heads.” Remove the armor, and suddenly everyone becomes more careful.

Our need for danger isn’t a design flaw – it’s a feature carefully crafted by evolution. Teenage risk-taking, often dismissed as stupidity, actually serves crucial adaptive functions: developing social status, attracting mates, acquiring resources. The adolescent brain undergoes dramatic rewiring of dopaminergic systems that amplify reward-seeking behavior. Scientists now recognize this phase as “normative, biologically driven, and to some extent, inevitable.”

Consider what happened as we systematically removed risk from childhood. Between 1981 and 1997, children’s free play time plummeted by **25%**. During that same period, rates of childhood anxiety and depression exploded **five to eight-fold**. Today’s average child scores higher on clinical anxiety measures than psychiatric patients from the 1950s. We bubble-wrapped our kids and created an epidemic of fragility.

Children need what Norwegian researchers term “risky play” – climbing heights, using dangerous tools, exploring without supervision. This isn’t neglect but natural medicine. Kids who engage in physical risks show **significantly lower anxiety and depression symptoms** compared to their overprotected peers. Just as exposure therapy cures phobias by facing fears gradually, confronting manageable dangers teaches emotional regulation and resilience. Remove all risk, and you remove the very experiences that build psychological strength.

Human creativity shines brightest when systems try to control us too tightly. Soviet citizens, facing crushing censorship, developed an entire shadow vocabulary to communicate forbidden ideas. They couldn’t say “Stalin is a tyrant,” so they’d proclaim “our beloved leader’s wisdom knows no bounds” with just the right ironic inflection. Psychiatric hospitals used for political prisoners became “prophylactic centers.” The KGB transformed into “the neighbors” or “competent organs.” Censorship didn’t stop communication – it drove it underground, making it more subversive and harder to control.

This pattern repeats everywhere safety systems overreach. Social media bans certain words? Users write “pr0n” or “unalive” instead. Teenagers bypass parental controls faster than parents can install them. The human brain doesn’t submit to excessive safety measures. It innovates around them.

Automation presents particularly insidious challenges to human competence. Airline pilots who rely heavily on autopilot don’t just lose manual flying skills – they experience **significant degradation in cognitive abilities**. They lose situation awareness, forget procedural steps, and panic when automation behaves unexpectedly. The 2009 Air France 447 crash, which killed 228 people, exemplified this: the crew couldn’t recover after the autopilot unexpectedly disconnected and left them flying by hand.

GPS dependency offers quantifiable proof of cognitive decline. Heavy GPS users demonstrate **progressively worse spatial memory** over time, with brain imaging revealing a fundamental shift in how they navigate. The hippocampus, our mental mapmaker, atrophies while the caudate nucleus, responsible for habit formation, takes over. We stop thinking about where we are. We just follow orders. The technology designed to help us navigate the world is literally shrinking the part of our brain that understands it.

Educational technology shows similar patterns. Students who rely heavily on AI assistants scored **68% worse** on critical thinking assessments, with three-quarters admitting they’d lost the ability to analyze problems independently. When you outsource your thinking for too long, your brain forgets how to think. The tools meant to enhance our intelligence may be undermining it.

Financial markets provide perhaps the most spectacular example of safety mechanisms creating the very disasters they’re meant to prevent. Banks deemed “too big to fail” took increasingly **reckless risks**, knowing taxpayers would bear the consequences. Their sophisticated Value-at-Risk models bred false confidence; simple statistical approaches would have predicted the 2008 crisis more accurately than banks’ complex internal systems. The safety net became a moral hazard, encouraging the exact behavior it was designed to prevent.

Healthcare’s digital transformation tells a similar story. Electronic health records, implemented to reduce medical errors, forced doctors to spend **twice as much time** documenting as interacting with patients. Information overload actually increased mistake rates. The human connection that helps physicians spot subtle problems – a worried glance, a hesitant tone – gets lost behind screens. The cure has become a new disease.

Even well-intentioned content moderation backfires. AI systems tasked with removing harmful social media content can’t grasp context – they delete breast cancer awareness posts for “nudity” while sophisticated hate speech slips through. Users adapt by developing increasingly clever circumventions, creating an arms race where harmful content becomes harder to detect. The safety system itself drives evolution of the threat.

Cultural contexts profoundly shape how we perceive and respond to risk. Chinese investors take **significantly larger financial gambles** than Americans, trusting extended family networks to provide support if ventures fail. Yet these same risk-takers meticulously avoid any behavior that might disrupt social harmony. Scandinavian parents leave infants to nap outside in freezing temperatures, viewing cold exposure as healthy – a practice that would trigger child protective services in America. Indian children enjoy hours of unsupervised play while American kids shuttle between organized activities. The research on which approach produces more creative, resilient adults? It doesn’t favor the bubble-wrapped ones.

Trust in AI varies dramatically across cultures too. Citizens of Brazil, India, and China express **far greater confidence** in AI systems than their American or European counterparts. Curiously, in China and South Korea, older generations trust AI more than younger ones – reversing the pattern seen everywhere else. These differences suggest there’s no universal “right” level of safety. Context matters profoundly.

The ancient Stoics understood something we’re rediscovering through modern science. Ryan Holiday’s “The Obstacle Is the Way” popularized their core insight: what blocks our path often becomes the path itself. Difficulties don’t just test us – they transform us. This isn’t motivational fluff but biological reality.

Nassim Taleb coined the term “antifragility” to describe systems that grow stronger under stress. Muscles need resistance. Immune systems require pathogens. Bones need impact. Remove these stressors and the systems weaken. Human psychology follows identical principles. Research confirms that people exposed to **moderate adversity** demonstrate superior stress responses compared to those facing either no challenges or extreme trauma.

This operates through hormesis – the biological principle where small doses stimulate while large doses harm. Studies reveal that social defeat stress actually **enhances learning and memory** in young subjects when properly calibrated. Our ancestors who could learn from manageable failures survived and reproduced. Those who couldn’t adapt didn’t. Evolution built us to need challenges.

Modern life creates a profound evolutionary mismatch. Our threat-detection systems evolved for immediate, concrete dangers – predators, cliffs, poisonous plants. Today’s risks arrive as abstract statistics and delayed consequences. Our ancient alarm systems fire constantly without appropriate outlets for action. The result? Epidemic anxiety disconnected from real threats.

Technology increasingly makes decisions for us, creating what philosopher Shannon Vallor calls “moral deskilling.” When AI systems handle ethical choices, humans lose practice with moral reasoning. Military drone operators rely heavily on algorithmic recommendations for life-and-death decisions. Just as pilots lose flying skills through automation, we risk losing our capacity for ethical deliberation. Smart home users report feeling “trained” by their devices, with technology becoming “the enemy” when it conflicts with human preferences. Full automation reduces users’ sense of competence and mastery. We need manual control to maintain our humanity.

These individual effects cascade into societal consequences. Cultures prioritizing absolute safety show **reduced innovation** and economic dynamism. Shared challenges that historically bound communities together disappear. As online and offline experiences merge into one sanitized, predictable flow, we risk losing the friction that sparks creativity and connection.

Research consistently shows behavioral adaptation offsets **10-50%** of intended safety improvements. Complete risk compensation remains rare, but partial offsets prove significant enough to matter. More troublingly, psychological costs of excessive safety may outweigh physical benefits, particularly for developing minds.
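To see what a partial offset means in practice, here is a minimal back-of-the-envelope sketch. The function name and the 30% "intended reduction" are hypothetical, picked only to illustrate the arithmetic; the 10% and 50% offsets are the two ends of the range quoted above.

```python
def net_risk_reduction(intended_reduction, offset_fraction):
    """Share of baseline harm actually avoided after behavioral adaptation.

    intended_reduction: reduction the measure would deliver if behavior
                        stayed the same (0.30 means 30% fewer injuries).
    offset_fraction:    portion of that benefit cancelled by riskier behavior.
    """
    if not 0.0 <= offset_fraction <= 1.0:
        raise ValueError("offset_fraction must be between 0 and 1")
    return intended_reduction * (1.0 - offset_fraction)


# Hypothetical measure engineered to cut injuries by 30%.
for offset in (0.10, 0.50):
    net = net_risk_reduction(0.30, offset)
    print(f"offset {offset:.0%}: net reduction {net:.0%}")
```

Under these assumed numbers, the same measure delivers a 27% reduction at the low end of the range and only 15% at the high end: still a benefit, but at the high end only half of what the designers intended.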

The solution isn’t abandoning safety measures but designing them with human nature in mind. Dutch traffic engineer Hans Monderman demonstrated this brilliantly by removing all signals and signs from intersections. Accidents dropped **40%** while traffic flow improved. Drivers, suddenly uncertain, paid attention. By making people feel less safe, the design made everyone behave more safely. Sometimes optimal safety emerges from carefully calibrated uncertainty.

This principle extends to AI design. We need systems that periodically require human input to maintain skills. That explain their reasoning so we understand what’s happening. That enhance rather than replace human capabilities. That adapt to different cultural risk preferences. That provide age-appropriate challenges rather than universal padding.

We’re creating a generation poorly equipped for life’s inevitable uncertainties. Children who’ve never navigated risk independently crumble when facing adult challenges. Students dependent on AI for analysis lack critical thinking when systems fail. Drivers who’ve only known autonomous vehicles become helpless in emergencies requiring manual control.

Humans require a delicate balance between safety and challenge, protection and agency, certainty and mystery. Our evolved psychology needs manageable risks to develop properly. Remove all danger, uncertainty, and difficulty from life, and we don’t create paradise. We create fragility.

Malcolm Gladwell’s driverless car insight encapsulates this paradox perfectly. Technology designed to eliminate accidents through flawless safety might fail precisely because it succeeds too well. Pedestrians, no longer fearing consequences, could treat streets as playgrounds. The very feature meant to protect us – perfect adherence to safety rules – could paralyze the systems we depend on.

This isn’t an argument against safety or AI. It’s a recognition that human flourishing requires more than protection from harm. We need systems that shield us from genuine dangers while preserving the manageable challenges essential for growth. The goal shouldn’t be eliminating all risk but optimizing the delicate balance where humans thrive.

As we race toward ever-safer AI systems, we must ask not merely “how can we make this safer?” but “what might we lose if we succeed?” The answer may determine whether future generations develop the resilience, creativity, and agency needed to navigate an uncertain world – or whether, in our quest to protect them from all harm, we inadvertently create the greatest harm of all.

References:

https://www.inc.com/chloe-aiello/malcolm-gladwell-says-driverless-cars-are-too-safe-heres-why/91208736

https://www.researchgate.net/publication/13619289_Risk_Homeostasis_Theory_An_Overview

https://en.wikipedia.org/wiki/Risk_compensation

https://libertyrugby.org/2017/01/10/dangers-football-safety-equipment

https://pmc.ncbi.nlm.nih.gov/articles/PMC9624300

https://www.frontiersin.org/articles/10.3389/fpsyg.2021.694134/full

https://files.eric.ed.gov/fulltext/EJ985541.pdf

https://www.child-encyclopedia.com/outdoor-play/according-experts/risky-play-and-mental-health

https://www.child-encyclopedia.com/outdoor-play/according-experts/outdoor-risky-play

https://www.nature.com/articles/s41598-020-62877-0

https://slejournal.springeropen.com/articles/10.1186/s40561-024-00316-7

https://www.sciencedirect.com/science/article/abs/pii/S0738399116303263

https://pmc.ncbi.nlm.nih.gov/articles/PMC9422765

https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2024.1382693/full

https://en.wikipedia.org/wiki/Antifragility

https://www.nature.com/articles/s41599-023-02413-3

https://journals.sagepub.com/doi/10.1177/09567976231160098

https://en.wikipedia.org/wiki/Evolutionary_mismatch

https://dl.acm.org/doi/fullHtml/10.1145/3411764.3445058
